Tech Stack

- Ubuntu & ROCm (I have an AMD GPU)

- Ollama (Llama2 model)

- Svelte

- Google Takeout & Maps

- Yelp Fusion API

- NPM: wuzzy

- NPM: svelte-maplibre

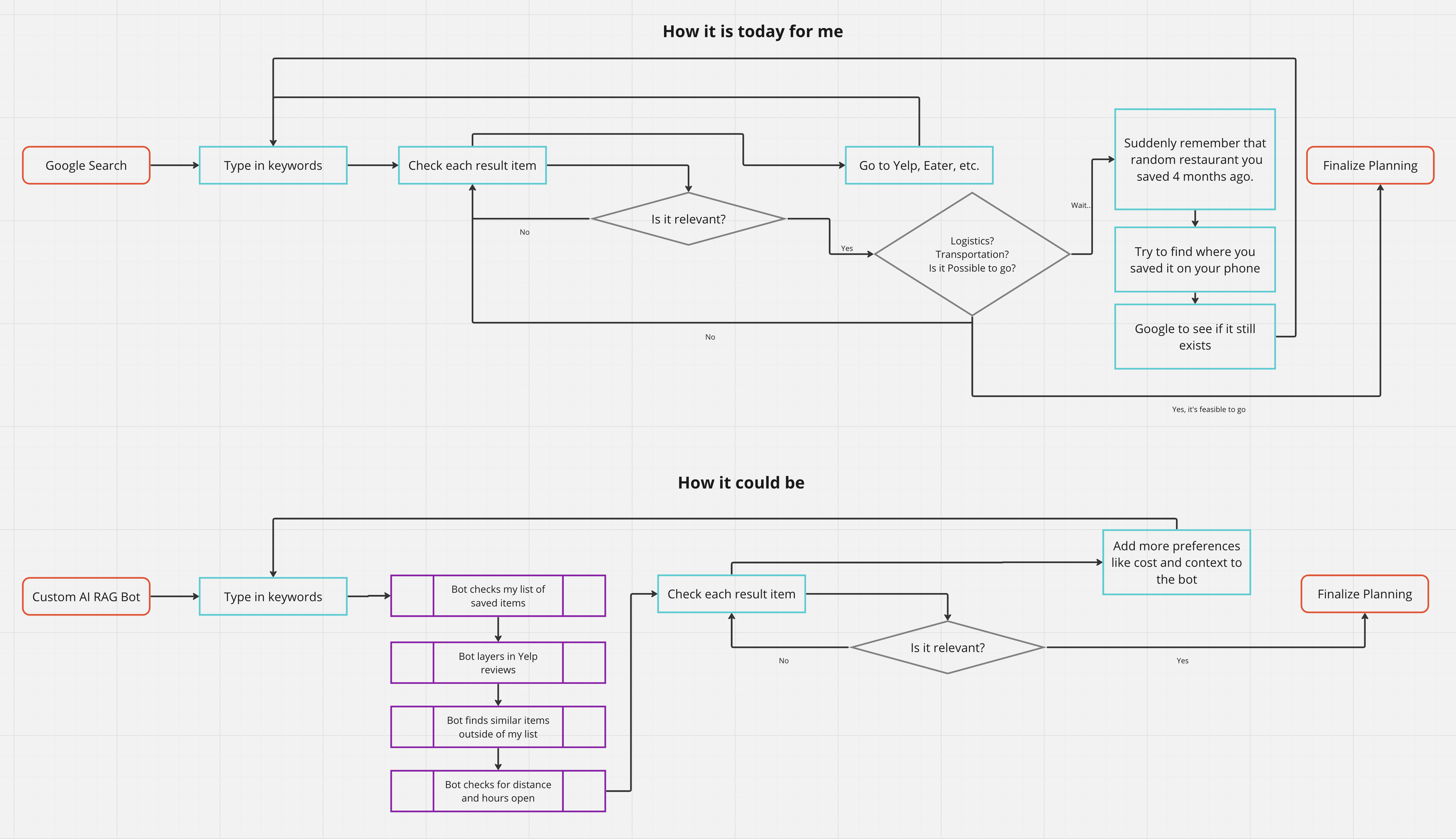

Having used ChatGPT for a few months now, I've found several great uses that replace the need for a search engine. But the biggest limitation is that my own data isn't part of those searches. There's a rush of startups and companies looking to fill that gap, promising to ingest spreadsheets, documents, and internal wikis.

But I wanted to experiment with a specific scenario: help me find a restaurant that's relevant to the moment while prioritizing the places I'd saved before. What I'd want differs hugely depending on whether it's a work lunch, a date night, or a 3 am craving.

Enter RAG (Retrieval-Augmented Generation). At its most basic, you're just stuffing the AI bot's chat prompt with your preferences ahead of time. But it takes a bit of prep to stuff it with the right data.

A huge lift came from Learn By Building AI's article.

For this proof-of-concept, I cached all the data I needed instead of making live API calls to Google and Yelp. I downloaded my Google Maps data through Google Takeout, then ran a handful of saved locations against the Yelp Fusion database and blended its data into my existing list, namely price and recent reviews.
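The blending step could look something like the sketch below, which assumes the Takeout export and the cached Yelp results have already been loaded into plain arrays. The field names (`name`, `price`, `reviews[].text`) and the helpers `normalizeName` and `blendPlaces` are hypothetical, and matching on the business name alone is a simplification; comparing coordinates as well would likely be more reliable.

```javascript
// Hypothetical shapes:
//   savedPlaces:    [{ name, ... }] loaded from the Google Takeout export
//   yelpBusinesses: [{ name, price, reviews: [{ text }] }] from cached Yelp calls
function normalizeName(name) {
  // Lowercase and collapse punctuation/whitespace so "Ample Hills Creamery!"
  // and "ample hills creamery" compare equal.
  return name.toLowerCase().replace(/[^a-z0-9]+/g, ' ').trim();
}

function blendPlaces(savedPlaces, yelpBusinesses) {
  // Index Yelp results by normalized name for quick lookup.
  const yelpByName = new Map(
    yelpBusinesses.map((biz) => [normalizeName(biz.name), biz])
  );

  // Copy each saved place and attach price and review text when Yelp has a match.
  return savedPlaces.map((place) => {
    const biz = yelpByName.get(normalizeName(place.name));
    return {
      ...place,
      price: biz?.price ?? null,
      reviews: (biz?.reviews ?? []).map((review) => review.text),
    };
  });
}
```

Places without a Yelp match keep a `null` price and an empty review list, so later steps can still score them on their name.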

My PC is an AMD build, which is great for gaming but has lagged behind for AI work. The drivers have finally caught up with my GPU, so I set up ROCm on Ubuntu so the AI models could run on my GPU instead of my CPU.

Ollama is a great piece of software. It runs in the background as its own server, so I can treat it like an API endpoint within my web app. I can even track the calls in the terminal itself.
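Because Ollama exposes an HTTP API, calling it from the web app is a plain `fetch`. This sketch assumes Ollama is listening on its default local port (11434) and that the `llama2` model has been pulled. It uses the `/api/generate` endpoint with `stream: false`, so the reply arrives as a single JSON object whose `response` field holds the generated text. The `askOllama` name and the injectable `fetchImpl` parameter (handy for testing) are illustrative, not from the original app.

```javascript
// Assumes a local Ollama server on its default port with llama2 available.
const OLLAMA_URL = 'http://localhost:11434/api/generate';

async function askOllama(prompt, fetchImpl = fetch) {
  const res = await fetchImpl(OLLAMA_URL, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    // stream: false asks for one JSON object instead of a stream of chunks.
    body: JSON.stringify({ model: 'llama2', prompt, stream: false }),
  });
  if (!res.ok) {
    throw new Error(`Ollama request failed with status ${res.status}`);
  }
  const data = await res.json();
  // The generated text lives in the `response` field.
  return data.response;
}
```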

I'm still evaluating Svelte as a framework for building websites, and I really like its simple syntax and layout. I ran the app on a separate port, and it could talk to Ollama, all on one machine!

The first real challenge was filtering my saved list for relevance to the user's prompt before sending anything to the AI bot. Stuffing the prompt with hundreds of entries didn't seem great.

What I found was a set of formulas that calculate the "distance" between any two words or phrases. For example, "cake" is closer to "cat" than to "strawberry". The formula returns a score that indicates how similar the two words are.
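For a feel of what such a formula does, here is a from-scratch sketch of Jaro-Winkler similarity, the measure `wuzzy.jarowinkler` provides. It is a textbook version written for illustration and may not match wuzzy's implementation number for number. Scores run from 0 to 1, where 1 means identical; the Winkler step adds a bonus for a shared prefix of up to four characters, scaled by the conventional factor of 0.1.

```javascript
// Jaro similarity: 1 for identical strings, 0 when no characters match.
function jaro(s1, s2) {
  if (s1 === s2) return 1;
  const len1 = s1.length;
  const len2 = s2.length;
  if (len1 === 0 || len2 === 0) return 0;

  // Characters only count as matching if they sit within this distance.
  const matchWindow = Math.max(0, Math.floor(Math.max(len1, len2) / 2) - 1);
  const matched1 = new Array(len1).fill(false);
  const matched2 = new Array(len2).fill(false);

  let matches = 0;
  for (let i = 0; i < len1; i++) {
    const start = Math.max(0, i - matchWindow);
    const end = Math.min(i + matchWindow + 1, len2);
    for (let j = start; j < end; j++) {
      if (matched2[j] || s1[i] !== s2[j]) continue;
      matched1[i] = true;
      matched2[j] = true;
      matches++;
      break;
    }
  }
  if (matches === 0) return 0;

  // Matched characters that appear in a different order are transpositions.
  let k = 0;
  let outOfOrder = 0;
  for (let i = 0; i < len1; i++) {
    if (!matched1[i]) continue;
    while (!matched2[k]) k++;
    if (s1[i] !== s2[k]) outOfOrder++;
    k++;
  }
  const transpositions = outOfOrder / 2;

  return (matches / len1 + matches / len2 + (matches - transpositions) / matches) / 3;
}

// Jaro-Winkler: boost the Jaro score for a common prefix of up to 4 characters.
function jaroWinkler(s1, s2, p = 0.1) {
  const j = jaro(s1, s2);
  const maxPrefix = Math.min(4, s1.length, s2.length);
  let prefix = 0;
  while (prefix < maxPrefix && s1[prefix] === s2[prefix]) prefix++;
  return j + prefix * p * (1 - j);
}
```

With this version, `jaroWinkler('ice', 'nice')` comes out to about 0.92 (11/12), comfortably above the 0.7 cutoff in the `compareWords` function in this post, which hints at how short, common review words can inflate a score.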

To capture a sense of each location, I smashed the Yelp reviews together with the price and name of the business into a single test string. I would then compare the user's prompt against that test string: the more words that closely matched, the higher the score. Sorting by that score ranked my overall list of saved places.
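The ranking step might be sketched as follows. `toTestString`, `rankPlaces`, and the place shape `{ name, price, reviews }` are hypothetical, and `exactOverlap` is a stand-in scorer (exact shared words) included only so the block runs on its own. Any function taking `(prompt, text)` and returning a number, such as the `compareWords` function in this post, could be passed in its place.

```javascript
// Hypothetical place shape: { name, price, reviews: [string] }.
// Concatenate name, price and reviews into one searchable string.
function toTestString(place) {
  return [place.name, place.price ?? '', ...place.reviews].join(' ');
}

// Score every place against the prompt, sort best-first, keep the top N.
function rankPlaces(places, prompt, score, topN = 3) {
  return places
    .map((place) => ({ place, score: score(prompt, toTestString(place)) }))
    .sort((a, b) => b.score - a.score)
    .slice(0, topN);
}

// Stand-in scorer: counts prompt words (longer than 2 characters)
// that appear verbatim in the text.
function exactOverlap(prompt, text) {
  const words = new Set(text.toLowerCase().split(/\s+/));
  return prompt
    .toLowerCase()
    .split(/\s+/)
    .filter((word) => word.length > 2 && words.has(word)).length;
}
```

Keeping the scorer as a parameter makes it easy to swap the fuzzy Jaro-Winkler approach for something stricter when the fuzzy matches get too generous.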

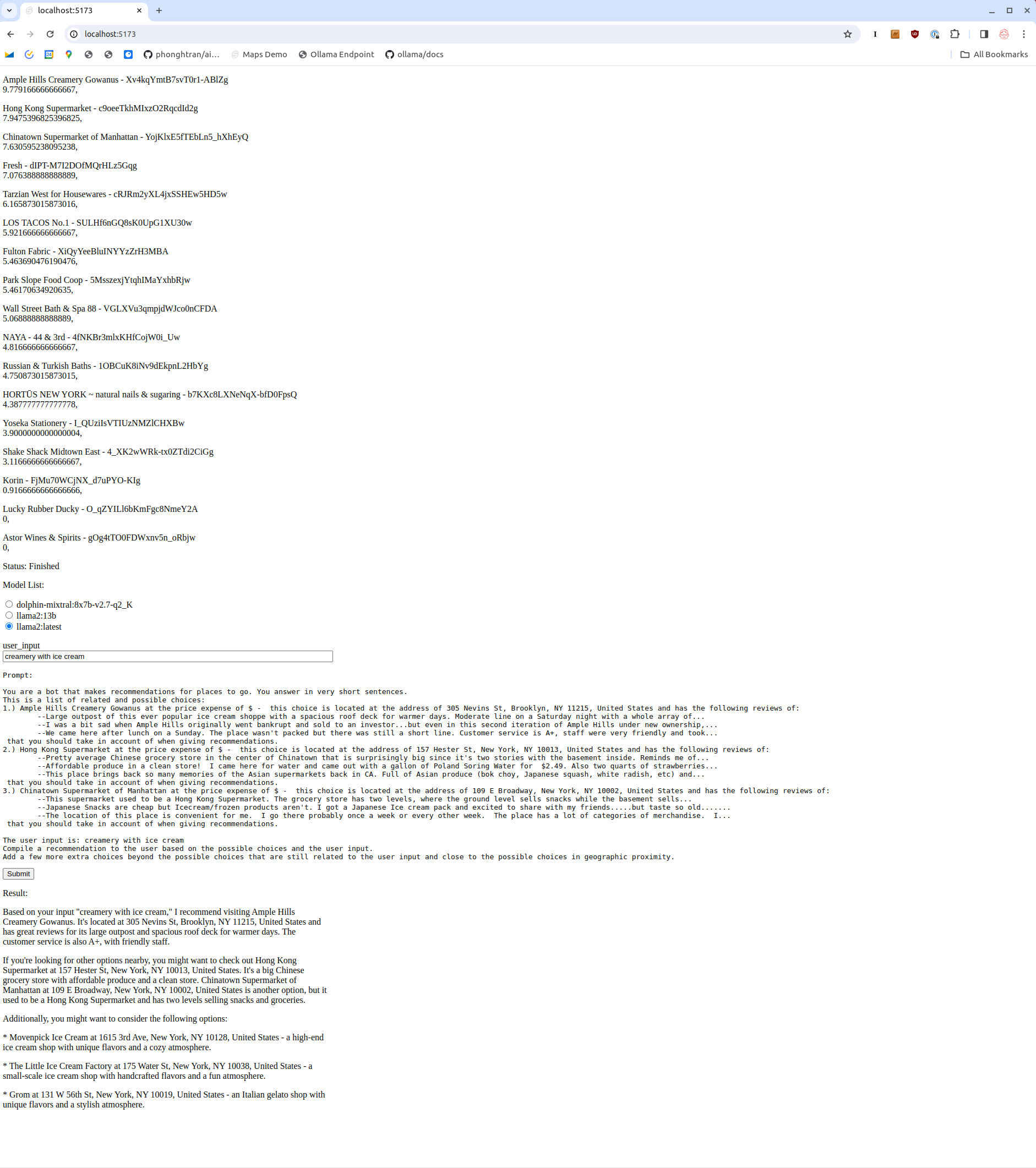

In the screenshot, the query "ice cream creamery" pushes "Ample Hills Creamery" to the top with a composite score of 9.77. But I soon discovered how crude this was: many of the "Hong Kong Supermarket" reviews used words like "came" or "nice," which the Jaro-Winkler formula treated as close enough, earning it a score as high as 7.94.

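```javascript
import wuzzy from 'wuzzy';

// Sums Jaro-Winkler similarities between every word in text1 and every
// word in text2, counting only reasonably close pairs.
// (`id` is not used inside the function.)
function compareWords(text1, text2, id) {
  const testArray = text1.toLowerCase().split(' ');
  const strArray = text2.toLowerCase().split(' ');

  let jaro = 0;
  for (let i = 0; i < testArray.length; i++) {
    for (let j = 0; j < strArray.length; j++) {
      // Skip words of two characters or fewer.
      const value =
        testArray[i].length > 2 && strArray[j].length > 2
          ? wuzzy.jarowinkler(testArray[i], strArray[j])
          : 0;
      // Only add pairs whose similarity clears the 0.7 threshold.
      jaro += value > 0.7 ? value : 0;
    }
  }
  return jaro;
}
```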

Once the string similarity between my saved places and the user prompt was worked out, the rest was oddly straightforward. Take the top three closest locations and stuff them into the chat message along with the user's prompt. The bot is also very amenable to having its personality and chat style defined up front.

```javascript
// `items` holds the top matching places; `user_input` is the user's message.
const prompt =
  'You are a bot that makes recommendations for places to go. You answer in very short sentences.\n' +
  'This is a list of related and possible choices:\n' +
  items +
  '\nThe user input is: ' +
  user_input +
  '\nCompile a recommendation to the user based on the possible choices and the user input. \n' +
  'Add a few more extra choices beyond the possible choices that are still related to the user input ' +
  'and close to the possible choices in geographic proximity.';
```
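The post doesn't show how `items` is built. One hypothetical way is to number the top three places and include their price and address, since the prompt asks the model for extra choices in geographic proximity and it can only judge that from location details it is given. The `formatItems` name and the `{ name, price, address }` shape are assumptions.

```javascript
// Hypothetical place shape: { name, price, address }.
// Formats the top `limit` places as a numbered list for the prompt.
function formatItems(places, limit = 3) {
  return places
    .slice(0, limit)
    .map(
      (place, i) =>
        `${i + 1}. ${place.name}, ${place.price ?? 'price unknown'}, ` +
        `${place.address ?? 'address unknown'}`
    )
    .join('\n');
}
```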

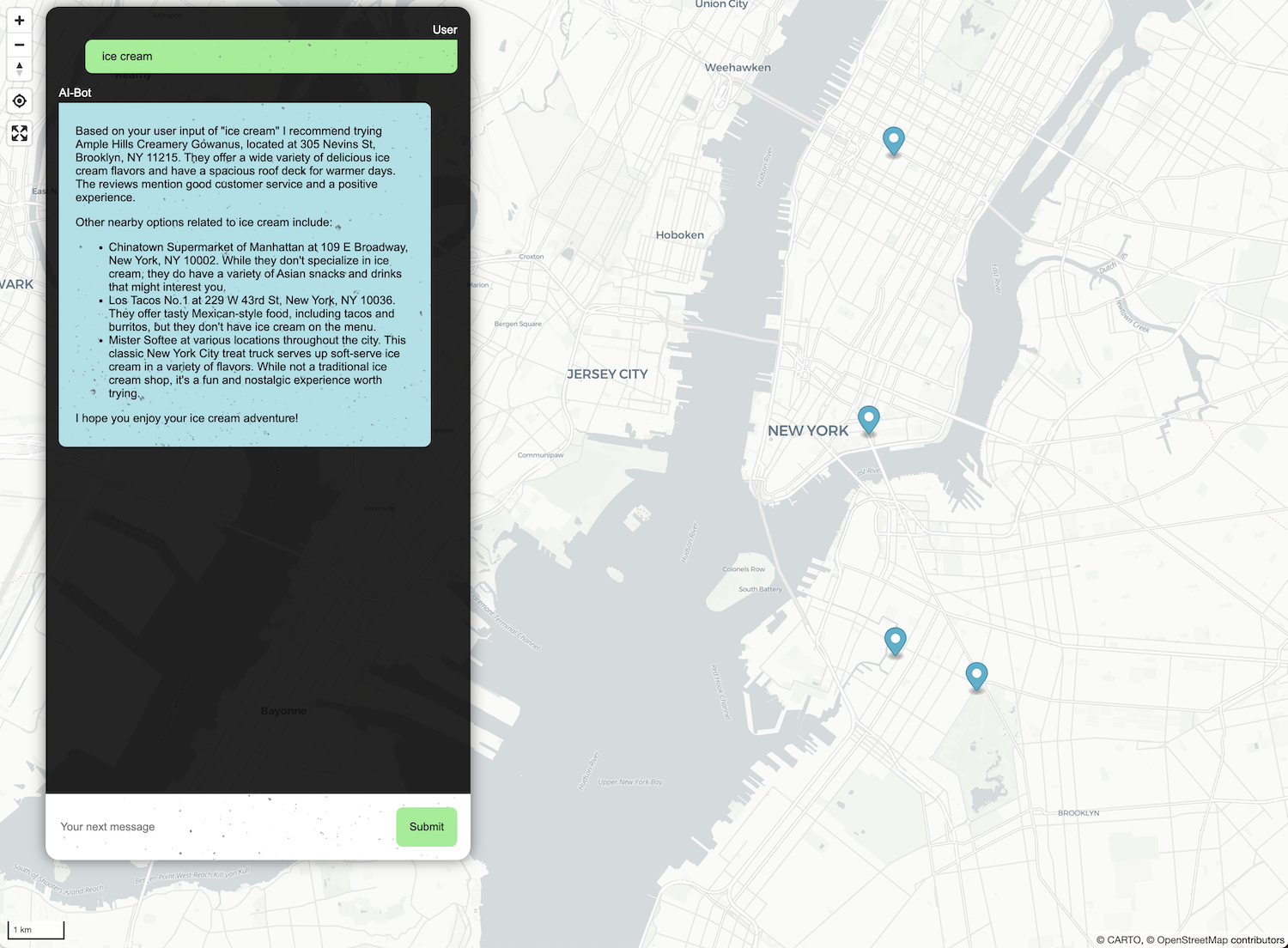

From there, you can send that prompt to Ollama's server to get back a response. Then reverse-engineer the recommendations to populate the map with markers.
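Reverse-engineering the response could be as simple as checking which saved place names appear in the model's text. This sketch assumes each saved place carries `name`, `lat`, and `lng` fields (hypothetical) and returns `[longitude, latitude]` pairs, the coordinate order MapLibre uses; it's worth checking which props your map component expects before wiring it in. Extra suggestions the model invents won't be in the saved list, so they would need a separate lookup (for example, against Yelp) to get coordinates.

```javascript
// Hypothetical place shape: { name, lat, lng }.
// Returns marker data for every saved place mentioned in the model's reply.
function extractMarkers(responseText, places) {
  const text = responseText.toLowerCase();
  return places
    .filter((place) => text.includes(place.name.toLowerCase()))
    // MapLibre expects coordinates as [longitude, latitude].
    .map((place) => ({ name: place.name, lngLat: [place.lng, place.lat] }));
}
```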

A fascinating quirk is how the model tries to rationalize locations that weren't very relevant, simply because I told it to prioritize them:

There's plenty of smoke and mirrors in this proof-of-concept, but a few follow-up experiments come to mind: